Benchmark cheating is a sensitive and controversial topic that most digital hardware enthusiasts will be familiar with. It turns up in smartphone processors, cameras, desktop processors, and graphics cards.

The cheaters may be hardware manufacturers, third-party evaluation agencies, or individual review bloggers, any of whom may go to great lengths to raise the benchmark scores of a particular piece of hardware (most commonly a processor).

There are various cheating methods, including the following:

- Putting the phone in the fridge to run benchmarks: At room temperature, benchmarking software keeps the phone under high load, so its temperature climbs until the processor automatically throttles down and performance drops. Chilling the phone in a fridge for the benchmark run slows the temperature rise and delays throttling, which results in higher scores.

- Forced overclocking: Some manufacturers deliberately raise the processor’s operating frequency beyond its normal limit while benchmark software is running, thereby inflating the scores. Benchmark runs are generally short, typically a few minutes, so if the overclock is managed carefully the processor can just about sustain it without showing obvious signs of stress.

- Opportunistic and targeted “optimization”: Whether for smartphone processors, cameras, desktop processors, or graphics cards, the industry evaluates performance with mature, standardized procedures made up of specific test items and steps, and these standards are public. Some manufacturers take “shortcuts” by implementing measures designed specifically to “optimize” for those test items and steps, and achieve higher scores as a result.
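The fridge trick from the list above can be illustrated with a toy thermal model. This is only a sketch: the clock speeds, throttle threshold, and heating and cooling constants are made-up values chosen for illustration, not measurements of any real phone.

```python
# Toy thermal model of a benchmark run. All constants are hypothetical.
MAX_CLOCK_GHZ = 3.0        # clock while below the throttle threshold
THROTTLED_CLOCK_GHZ = 1.8  # clock once the chip is too hot
THROTTLE_TEMP_C = 45.0     # assumed throttle threshold
HEAT_PER_GHZ = 0.25        # degrees C gained per second per GHz
COOLING_RATE = 0.01        # fraction of (temp - ambient) shed per second


def average_clock(ambient_c: float, duration_s: int = 180) -> float:
    """Return the average clock (GHz) over a run at the given ambient temperature."""
    temp = ambient_c
    total = 0.0
    for _ in range(duration_s):
        # Throttle whenever the chip is at or above the threshold.
        clock = MAX_CLOCK_GHZ if temp < THROTTLE_TEMP_C else THROTTLED_CLOCK_GHZ
        total += clock
        # Heat generated by the load minus heat lost to the surroundings.
        temp += HEAT_PER_GHZ * clock - COOLING_RATE * (temp - ambient_c)
    return total / duration_s


room = average_clock(ambient_c=25.0)
fridge = average_clock(ambient_c=4.0)
print(f"room: {room:.2f} GHz, fridge: {fridge:.2f} GHz")
```

With these constants, the chilled run stays at full clock for longer before throttling, so its average clock is higher even though the hardware is identical.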

At this point it would be natural to list some real-world cases of benchmark cheating that have been uncovered. Given the sensitivity of the matter, and since many seasoned hardware enthusiasts already know these cases, I will refrain from listing them here.

So why is the topic “controversial”? Because some of these practices sit in a gray area and are hard to classify clearly.

Objectively speaking, a manufacturer that takes “optimization” measures to improve performance in specific application scenarios has a degree of legitimacy on its side, and such measures can’t be labeled “cheating” outright. How the behavior should be judged ultimately depends on the manufacturer’s motive and purpose.

If the measures taken by manufacturers can improve scores while also genuinely enhancing the actual user experience, then this falls under “reasonable optimization.” Conversely, if the measures only improve scores without enhancing the actual user experience, then this is considered “malicious cheating.” There is only a fine line between reasonable optimization and malicious cheating.

The cheating methods I’ve mentioned are prevalent in consumer products, but the same phenomenon exists in the commercial server-grade processor sector. Recently SPEC (see below), the well-known organization behind server-grade processor benchmarks, stated that it will no longer accept performance test results for Intel processors that were obtained using a specific version of the Intel compiler.

The statement might be a bit convoluted and difficult for the average reader to understand, so let me explain:

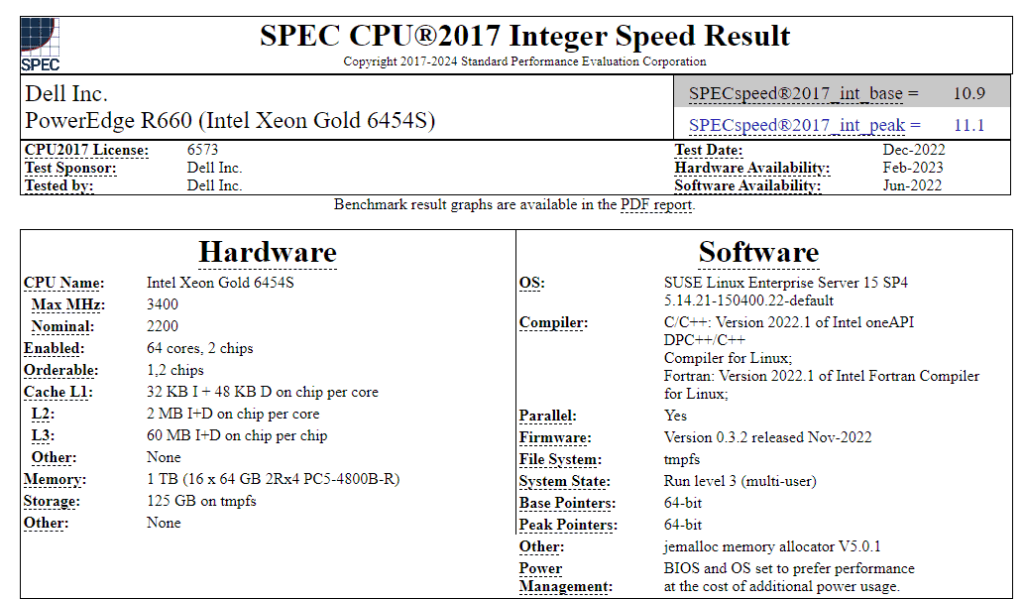

SPEC CPU 2017 is a professional-grade benchmark for high-end servers, data centers, and workstations. It uses standardized, rigorous testing methods and procedures to keep results objective and credible, and it is widely recognized in the industry.

The organization publicly releases and provides the procedural standards for conducting this test. It allows third-party evaluation agencies or individuals to perform evaluations according to these standards and then submit their results to SPEC. Once approved, SPEC incorporates these evaluation results into its database and publishes them on its official website, making them available for other users to reference and compare.

SPEC CPU 2017 results depend not only on hardware but also on software, with the compiler playing a key role. A compiler is a program that translates source code into machine code, and the optimizations it applies along the way can make the resulting program run noticeably faster on the same processor.

Recently, while reviewing benchmark reports submitted by third parties, SPEC found that 2,600 results relied on a narrowly targeted “optimization” tied to a specific version of the Intel compiler. Most of them were results for Intel Xeon Sapphire Rapids processors.

In aim and nature, this “optimization” resembles the third method I listed earlier: its purpose is to raise benchmark scores. It does nothing for real-world work performance, so the resulting scores do not accurately reflect what these processors can do, which essentially amounts to cheating.

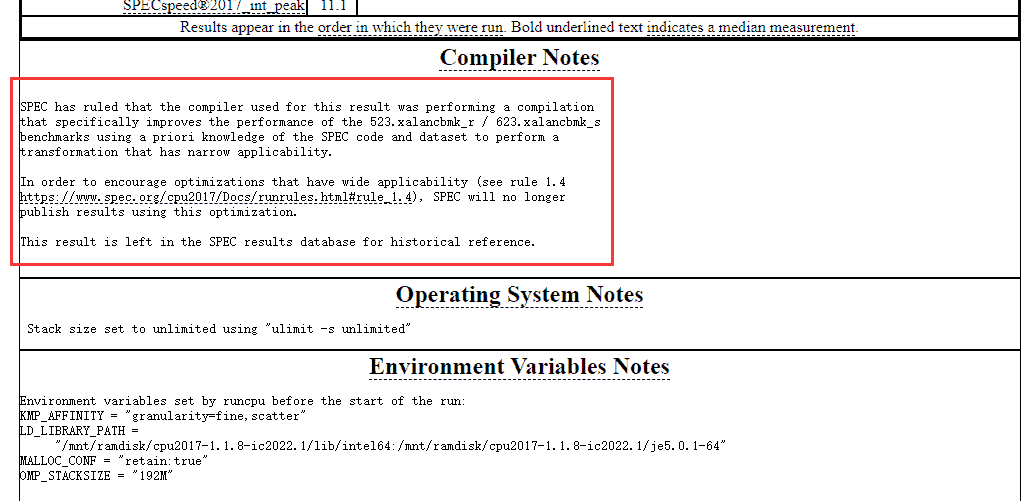

SPEC’s response to these 2,600 problematic results was relatively mild. Instead of taking strong measures such as deleting the results or banning accounts, it attached a rather diplomatic note to each result, as shown in the image above.

The main point of the explanation is as follows:

The compiler used for this result applied an optimization with narrow applicability. To encourage optimizations that are broadly applicable, SPEC will no longer accept or recognize results obtained with narrowly applicable optimizations. The existing results will not be deleted and remain in SPEC’s results database as a historical reference.

SPEC’s statement was very polite. It saved face for the submitters by never using the word “cheating,” but it effectively declares these results invalid, which comes to the same thing.

This is one of the few instances in the industry where an evaluation organization has publicly revealed that some benchmark tests are unreliable and “inflated.” SPEC’s objective and responsible attitude is highly commendable.

For a variety of reasons, score inflation and cheating in hardware performance evaluations are hard to eliminate. When reading benchmark scores, therefore, don’t take any single number at face value; compare results from several different sources and weigh them rationally.
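One simple way to compare results from several sources is to take the median and flag anything that strays too far from it. The sketch below assumes made-up source names and scores and an arbitrary 10% tolerance; it is a starting point for a sanity check, not a way to prove that a score was inflated.

```python
from statistics import median


def summarize_scores(scores_by_source: dict, tolerance: float = 0.10):
    """Return the median score and the sources deviating from it by more than `tolerance`."""
    mid = median(scores_by_source.values())
    outliers = {
        source: score
        for source, score in scores_by_source.items()
        if abs(score - mid) / mid > tolerance
    }
    return mid, outliers


# Hypothetical scores for the same device from four sources.
scores = {"review_a": 1000, "review_b": 1020, "review_c": 990, "vendor": 1250}
mid, outliers = summarize_scores(scores)
print(mid, outliers)
```

Here the three independent reviews cluster around the median while the hypothetical vendor figure is flagged, which is a cue to look more closely at how that number was produced.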
