SRAM (Static Random Access Memory), a long-established memory technology, has lit up the A-share semiconductor sector for two consecutive days. On February 21, SRAM concept stocks surged again: West Test Data hit the 20% daily limit ("20CM"), Beijing Junzheng and Hengshuo Shares rose more than 10% at one point during the session, and Wanrun Technology, Oriental Science, and others followed with gains.

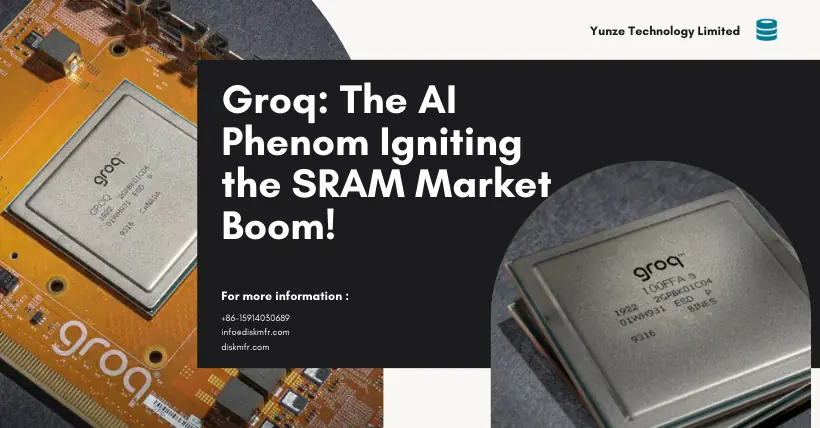

On the news front, Groq, founded by Jonathan Ross, a designer of Google’s first-generation TPU, announced that its new-generation LPU delivered twice the inference speed of GPUs in several public tests, at close to the lowest price. Third-party tests further showed that the chip significantly speeds up large language model inference, running up to 10 times faster than NVIDIA’s GPUs. Unlike GPUs, the LPU uses SRAM as its memory.

Groq bills its large-model inference chip as the world’s first LPU (Language Processing Unit): a Tensor Streaming Processor (TSP) built on its new TSA architecture and designed to accelerate compute-intensive workloads such as machine learning and artificial intelligence.

Groq’s LPU is built on a 14nm process rather than a more expensive cutting-edge node, yet its self-developed TSA architecture provides a high degree of parallelism and can handle millions of data streams simultaneously. The chip also integrates 230MB of SRAM in place of DRAM to secure memory bandwidth, with on-chip memory bandwidth reaching up to 80TB/s.

According to official data, Groq’s LPU chip performs exceptionally well, offering up to 1000 TOPS (Tera Operations Per Second) of computational power, and in some machine learning models, its performance can be 10 to 100 times higher than conventional GPUs and TPUs.

Groq states that cloud servers based on its LPU chip far outperform NVIDIA GPU-based services such as ChatGPT in computation and response speed, generating up to 500 tokens per second, whereas the public version of ChatGPT-3.5 generates only about 40 tokens per second.

Since ChatGPT-3.5 runs primarily on NVIDIA GPUs, these figures imply that Groq’s LPU responds more than 10 times faster than NVIDIA’s GPUs (500 / 40 = 12.5).

Groq also claims that, in large-model inference, cloud servers based on its LPU chip ran 18 times faster than those of other cloud platform providers.

But can SRAM upend the industry in the short term? Many memory industry professionals answer with a flat no.

Memory falls mainly into two types: DRAM (Dynamic Random Access Memory) and SRAM (Static Random Access Memory). HBM, a high-performance form of DRAM, is currently widely used in AI chips.

Compared to DRAM, SRAM’s advantage is its high access speed, but its drawback is equally clear: it is too expensive.

According to Groq, each LPU carries 230MB of SRAM, and a single LPU card costs more than $20,000.

Industry experts have calculated that, because each Groq card holds so little memory, running the Llama-2 70B model takes about 350 Groq cards, whereas only 8 NVIDIA H100 cards are needed.
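The gap in card counts follows mostly from memory capacity. The sketch below estimates only a lower bound: the number of cards needed just to hold the model weights. The 230MB per LPU comes from Groq's figure above; the 80GB of HBM per H100 and the bytes-per-parameter values are assumptions introduced here for illustration, and the function name `min_cards` is hypothetical.

```python
# Assumptions (not from the article): 80 GB HBM per H100, and FP16/INT8
# weight precision. Only the 230 MB per LPU figure comes from Groq.
SRAM_PER_LPU_BYTES = 230 * 10**6   # 230 MB of on-chip SRAM per LPU card
HBM_PER_H100_BYTES = 80 * 10**9    # assumed 80 GB of HBM per H100 card
LLAMA2_70B_PARAMS = 70 * 10**9     # 70 billion parameters


def min_cards(params: int, bytes_per_param: int, memory_per_card: int) -> int:
    """Lower bound on cards needed to hold the weights alone (ceiling division)."""
    total_bytes = params * bytes_per_param
    return -(-total_bytes // memory_per_card)


if __name__ == "__main__":
    for label, bytes_per_param in (("FP16", 2), ("INT8", 1)):
        lpu = min_cards(LLAMA2_70B_PARAMS, bytes_per_param, SRAM_PER_LPU_BYTES)
        gpu = min_cards(LLAMA2_70B_PARAMS, bytes_per_param, HBM_PER_H100_BYTES)
        print(f"{label}: at least {lpu} LPU cards vs at least {gpu} H100 cards")
```

Under these assumptions the weights alone need roughly 305 (INT8) to 609 (FP16) LPU cards, against 1 to 2 H100 cards. The estimate ignores activations, the KV cache, and throughput targets, so it is not meant to reproduce the cited figures of 350 and 8 exactly, only to show why the LPU count lands in the hundreds.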

On cost, at the same throughput Groq’s hardware costs 40 times as much as the H100, and its energy cost is 10 times as high. At that level of cost-effectiveness, SRAM cannot yet displace HBM, and the LPU cannot yet displace the GPU. Some therefore believe that GPUs paired with HBM remain the best solution for today’s AI needs.

Industry insiders state bluntly that Groq’s approach is unlikely to be deployed at scale in the short term and therefore cannot displace GPUs.

