NVIDIA’s H100 AI chip has made it a multitrillion-dollar company, one that could be worth more than Alphabet and Amazon. While competitors have been playing catch-up, NVIDIA may be on the verge of extending its lead with its new Blackwell B200 GPU and GB200 “Superchip”.

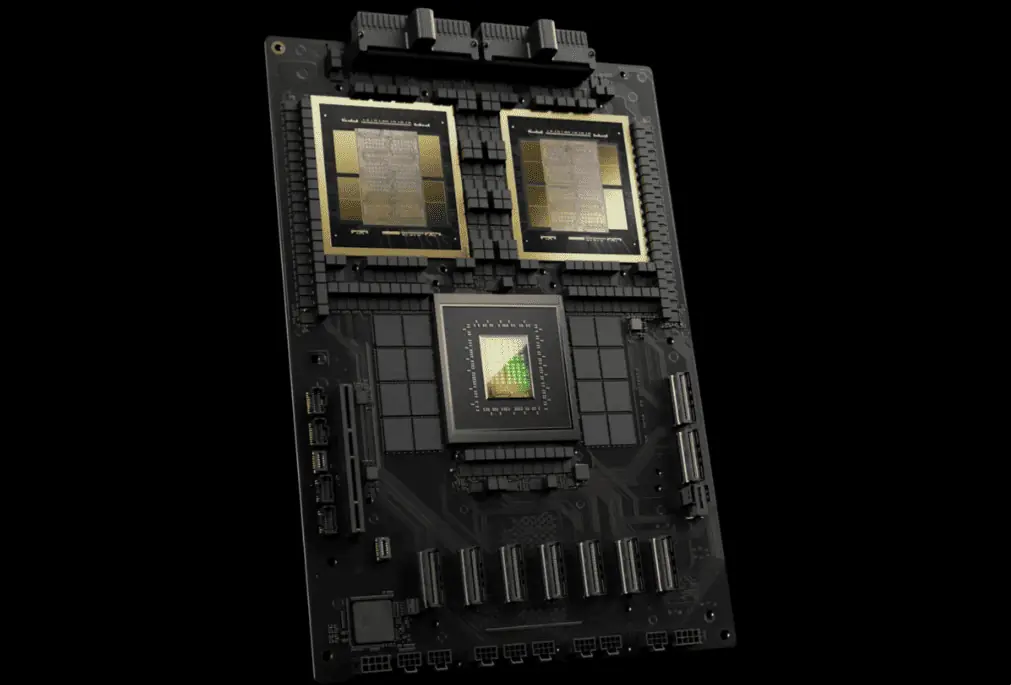

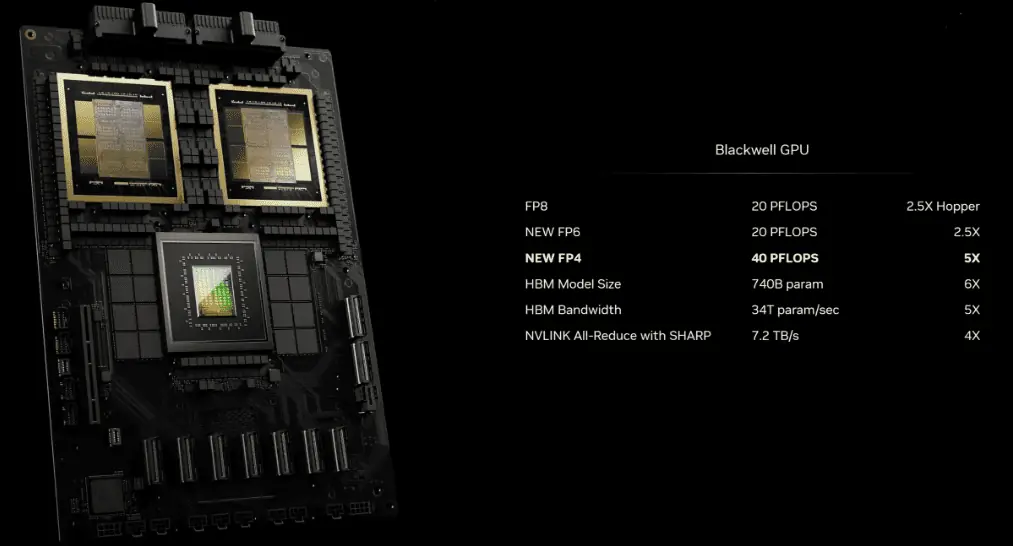

NVIDIA says the new B200 GPU has 208 billion transistors and delivers up to 20 petaflops of FP4 compute. The GB200, which combines two of those GPUs with a single Grace CPU, can deliver 30 times the performance on LLM inference workloads while potentially being far more efficient: NVIDIA says it cuts cost and energy consumption by “up to 25x” compared with an H100.

NVIDIA claims that training a 1.8-trillion-parameter model previously required 8,000 Hopper GPUs and 15 megawatts of power. Now, NVIDIA’s CEO says, 2,000 Blackwell GPUs can do the job while drawing just 4 megawatts.
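
The claim is easier to weigh as ratios. This short Python sketch only rearranges NVIDIA’s quoted figures; the function name `training_ratios` is our own, and the “kW per GPU” value is simply total quoted power divided by GPU count (so it folds in whatever else the 15 MW and 4 MW cover), not a chip’s rated power.

```python
"""Rearrange NVIDIA's quoted training figures for a 1.8-trillion-parameter model."""

# Figures as quoted by NVIDIA (power is a total, not a per-chip rating).
HOPPER_GPUS = 8_000
HOPPER_MEGAWATTS = 15.0
BLACKWELL_GPUS = 2_000
BLACKWELL_MEGAWATTS = 4.0


def training_ratios(old_gpus, old_mw, new_gpus, new_mw):
    """Return (GPU-count reduction, power reduction, old kW/GPU, new kW/GPU).

    kW/GPU is total quoted power divided by GPU count, so it includes
    any non-GPU load the quoted megawatts cover.
    """
    gpu_reduction = old_gpus / new_gpus
    power_reduction = old_mw / new_mw
    old_kw_per_gpu = old_mw * 1_000 / old_gpus
    new_kw_per_gpu = new_mw * 1_000 / new_gpus
    return gpu_reduction, power_reduction, old_kw_per_gpu, new_kw_per_gpu


if __name__ == "__main__":
    gpus, power, old_kw, new_kw = training_ratios(
        HOPPER_GPUS, HOPPER_MEGAWATTS, BLACKWELL_GPUS, BLACKWELL_MEGAWATTS
    )
    print(f"{gpus:.2f}x fewer GPUs, {power:.2f}x less power")
    print(f"{old_kw:.3f} kW per Hopper GPU vs {new_kw:.3f} kW per Blackwell GPU")
```

Taken at face value, the figures work out to 4x fewer GPUs and 3.75x less power, with slightly more power per Blackwell GPU (2.0 kW vs 1.875 kW): the savings come from needing a quarter as many chips.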

On a GPT-3 LLM benchmark with 175 billion parameters, NVIDIA says the GB200 delivers seven times the performance of an H100, and that it trains four times faster.

NVIDIA describes one key improvement as a second-generation transformer engine that doubles compute, bandwidth, and model size by using four bits per value instead of eight (hence the 20 petaflops of FP4 mentioned earlier). The second key difference only comes into play when large numbers of GPUs are linked together: a next-generation NVLink switch lets 576 GPUs talk to each other, with 1.8 terabytes per second of bidirectional bandwidth per GPU.
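
To see why halving the bits per value doubles the model size that fits in the same memory, here is a minimal Python sketch that counts weight storage only. It ignores activations, optimizer state, and any per-block scaling factors that real FP4 formats carry; `weight_bytes` and `params_that_fit` are illustrative helpers, not an NVIDIA API.

```python
def weight_bytes(num_params: int, bits_per_value: int) -> int:
    """Bytes needed to store the weights alone at a given precision."""
    return num_params * bits_per_value // 8


def params_that_fit(budget_bytes: int, bits_per_value: int) -> int:
    """How many weights fit in a fixed memory budget at a given precision."""
    return budget_bytes * 8 // bits_per_value


if __name__ == "__main__":
    params = 1_800_000_000_000  # 1.8 trillion, the model size NVIDIA cites
    for bits in (8, 4):
        print(f"{bits}-bit weights: {weight_bytes(params, bits) / 1e12:.1f} TB")
    budget = 1_000_000_000_000  # a hypothetical 1 TB memory budget
    print(params_that_fit(budget, 4) // params_that_fit(budget, 8))  # 2
```

The same factor of two applies to data movement: every byte transferred carries two 4-bit values instead of one 8-bit value.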

This required NVIDIA to build a new network switch chip with 50 billion transistors and some onboard compute of its own: NVIDIA says it delivers 3.6 teraflops of FP8 processing power.

Previously, NVIDIA says, clusters of 16 GPUs spent 60 percent of their time communicating with each other, and only 40 percent of their time doing actual computation.
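
A 60/40 split puts a hard ceiling on what faster math alone can achieve. The Amdahl-style Python sketch below assumes communication and computation never overlap (real systems often overlap them partially) and treats communication as the only overhead; the function names are our own.

```python
def useful_fraction(comm_fraction: float) -> float:
    """Share of wall-clock time spent computing, assuming no overlap with communication."""
    if not 0.0 <= comm_fraction < 1.0:
        raise ValueError("comm_fraction must be in [0, 1)")
    return 1.0 - comm_fraction


def max_speedup_if_comm_removed(comm_fraction: float) -> float:
    """Upper bound on speedup from eliminating communication time entirely."""
    return 1.0 / useful_fraction(comm_fraction)


if __name__ == "__main__":
    comm = 0.60  # NVIDIA's figure for a 16-GPU cluster
    print(f"useful work: {useful_fraction(comm):.0%}")
    print(f"ceiling from removing communication: {max_speedup_if_comm_removed(comm):.1f}x")
```

Under these assumptions, only 40 percent of GPU time does useful work, so cutting communication to zero could at most make such a cluster 2.5 times faster.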

NVIDIA is also counting on companies to buy these GPUs in bulk and package them into larger designs such as the GB200 NVL72, which combines 36 CPUs and 72 GPUs in a single liquid-cooled rack for a total of 720 petaflops of AI training performance or 1,440 petaflops (about 1.4 exaflops) of inference performance. The rack contains nearly two miles of internal cabling spread across 5,000 individual cables.

Each tray in the rack contains either two GB200 chips or two NVLink switches; each rack holds 18 of the former and nine of the latter. NVIDIA claims that a single rack can support a 27-trillion-parameter model. By comparison, GPT-4 is rumored to have about 1.7 trillion parameters.
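
The NVL72 figures above are internally consistent, which a few lines of Python can check. The sketch assumes, per NVIDIA’s description of the GB200, one Grace CPU and two B200 GPUs per superchip; the class, constant, and function names are our own, and the per-GPU values simply divide NVIDIA’s rack-level totals evenly.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Superchip:
    """A GB200: one Grace CPU plus two Blackwell GPUs."""
    cpus: int = 1
    gpus: int = 2


COMPUTE_TRAYS = 18        # trays holding two GB200 superchips each
SUPERCHIPS_PER_TRAY = 2
SWITCH_TRAYS = 9          # trays holding two NVLink switches each
SWITCHES_PER_TRAY = 2


def nvl72_totals(chip: Superchip = Superchip()) -> dict:
    """Count the parts in one rack from its tray layout."""
    superchips = COMPUTE_TRAYS * SUPERCHIPS_PER_TRAY
    return {
        "superchips": superchips,
        "cpus": superchips * chip.cpus,
        "gpus": superchips * chip.gpus,
        "nvlink_switches": SWITCH_TRAYS * SWITCHES_PER_TRAY,
    }


def per_gpu_petaflops(rack_petaflops: float, gpus: int) -> float:
    """Spread a rack-level throughput figure evenly across its GPUs."""
    return rack_petaflops / gpus


if __name__ == "__main__":
    totals = nvl72_totals()
    print(totals)
    print(per_gpu_petaflops(720, totals["gpus"]))    # training figure per GPU
    print(per_gpu_petaflops(1_440, totals["gpus"]))  # inference figure per GPU
```

Eighteen compute trays yield exactly the quoted 36 CPUs and 72 GPUs, and the inference total works out to 20 petaflops per GPU, matching the B200’s quoted FP4 peak. The article does not state the precision behind the 720-petaflop training figure.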

The company says Amazon, Google, Microsoft, and Oracle all plan to offer NVL72 racks as part of their cloud services, though it’s not clear how many they’ll buy.

Of course, NVIDIA is happy to offer companies other configurations as well. One is the DGX SuperPOD for DGX GB200, which combines eight systems into one for a total of 288 CPUs, 576 GPUs, 240TB of memory, and 11.5 exaflops of FP4 compute.
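
The SuperPOD totals follow from the rack numbers. Dividing 288 CPUs and 576 GPUs by eight gives 36 and 72, so this Python sketch assumes each of the eight systems matches the NVL72’s configuration and that every GPU contributes NVIDIA’s quoted 20 petaflops of FP4; the names are illustrative.

```python
CPUS_PER_SYSTEM = 36
GPUS_PER_SYSTEM = 72
FP4_PETAFLOPS_PER_GPU = 20  # NVIDIA's quoted B200 FP4 peak


def superpod_totals(systems: int = 8) -> tuple[int, int, float]:
    """Return (CPUs, GPUs, FP4 exaflops) for a pod of identical systems."""
    cpus = systems * CPUS_PER_SYSTEM
    gpus = systems * GPUS_PER_SYSTEM
    fp4_exaflops = gpus * FP4_PETAFLOPS_PER_GPU / 1_000  # 1 exaflop = 1,000 petaflops
    return cpus, gpus, fp4_exaflops


if __name__ == "__main__":
    cpus, gpus, exaflops = superpod_totals()
    print(f"{cpus} CPUs, {gpus} GPUs, {exaflops:.2f} exaflops FP4")
```

The result, 11.52 exaflops, rounds to the 11.5 exaflops NVIDIA quotes.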

NVIDIA says its systems can scale to tens of thousands of GB200 Superchips, linked by 800Gbps networking through its new Quantum-X800 InfiniBand (for up to 144 connections) or Spectrum-X800 Ethernet (for up to 64 connections).
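
If each of those connections is read as one 800Gbps port on a single switch (our assumption; the article does not spell this out), the aggregate port bandwidth is a simple product. A hedged Python sketch, with a helper name of our own:

```python
PORT_GBPS = 800  # per-connection speed quoted for both switch families


def aggregate_tbps(ports: int, gbps_per_port: int = PORT_GBPS) -> float:
    """Total port bandwidth in terabits per second, counting each port's rate once."""
    return ports * gbps_per_port / 1_000


if __name__ == "__main__":
    for name, ports in (("Quantum-X800 InfiniBand", 144), ("Spectrum-X800 Ethernet", 64)):
        print(f"{name}: {aggregate_tbps(ports):.1f} Tb/s")
```

Under that reading, the InfiniBand switch would total 115.2 Tb/s and the Ethernet switch 51.2 Tb/s.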

We don’t expect to hear anything about new gaming GPUs today, since this announcement came at NVIDIA’s GPU Technology Conference, which usually focuses almost entirely on GPU computing and AI rather than gaming. Still, the Blackwell GPU architecture will likely also power future RTX 50-series desktop graphics cards.


Disclaimer:

- This channel does not make any representations or warranties regarding the availability, accuracy, timeliness, effectiveness, or completeness of any information posted. It hereby disclaims any liability or consequences arising from the use of the information.

- This channel is non-commercial and non-profit. Re-posted content does not signify endorsement of its views or responsibility for its authenticity, and is not intended as guidance of any kind. This channel is not liable for any inaccuracies or errors in the re-posted or published information, directly or indirectly.

- Some data, materials, text, images, etc., used in this channel are sourced from the internet, and all reposts are duly credited to their sources. If you discover any work that infringes on your intellectual property rights or personal legal interests, please contact us, and we will promptly modify or remove it.