When NVIDIA’s RTX 40 series graphics cards come up, many people know that this generation introduced a significant new feature, DLSS 3.0 (nicknamed “Popeye” in Chinese-speaking communities), which uses AI to improve both image quality and frame rates.

AMD offers a comparable technology, FSR 3.0. However, because AMD has long trailed NVIDIA in the graphics card market, FSR 3.0 has not attracted as much attention as DLSS 3.0.

This article uses a demanding game, “The Last of Us Part I,” and a Radeon RX 7800 XT graphics card to test AMD’s FSR 3.0 and assess its real-world performance.

01

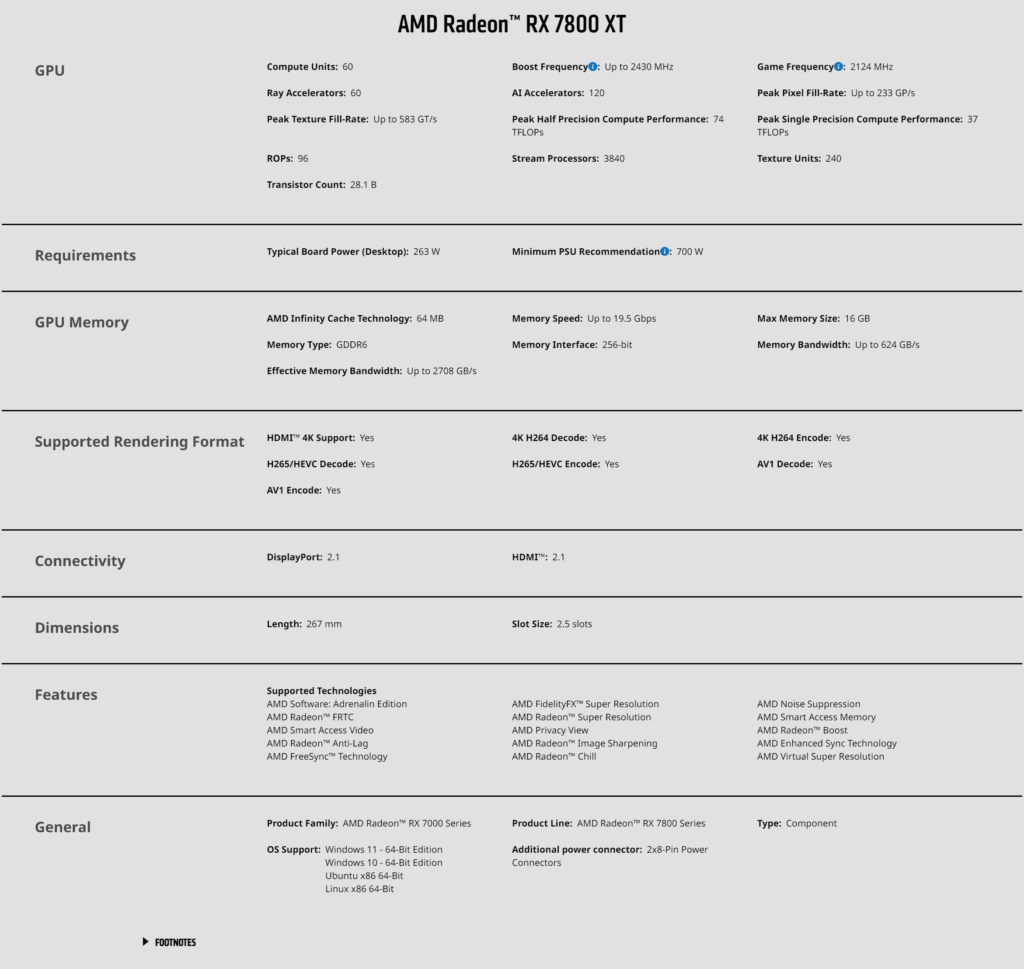

Test Platform Configuration

The main configuration is as follows:

The processor is a Core i9-13900K, the motherboard is an ASUS ROG Strix Z790-E Gaming, the memory is 32GB of DDR5-6000, the graphics card is a Radeon RX 7800 XT 16GB, storage is two Samsung 970 SSDs, and the operating system is Windows 11.

02

Game Benchmark Test

The following two sets of comparative tests run “The Last of Us Part I” at 1440P and 4K. Each set compares native rendering against the same resolution with FSR (FidelityFX Super Resolution) enabled.

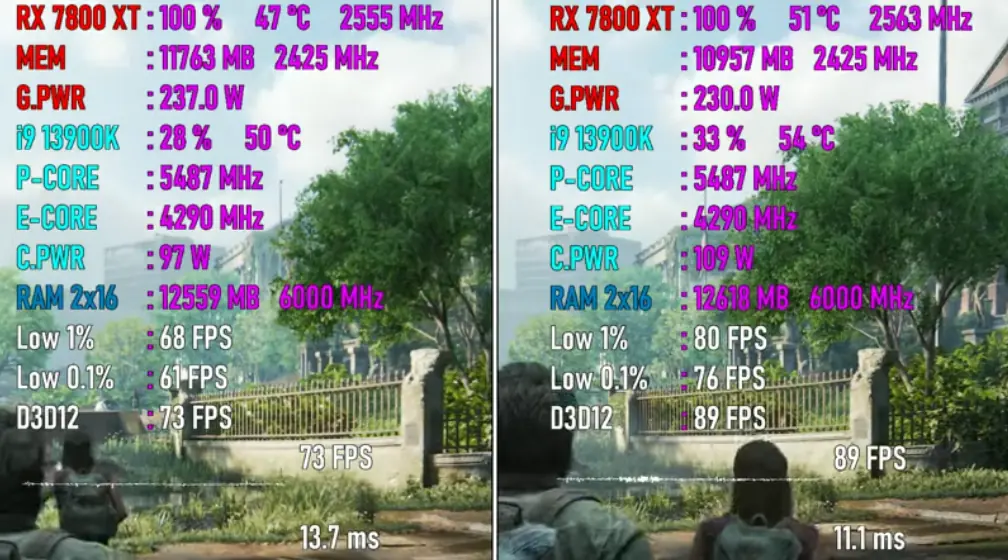

① 1440P Graphics Test

Platform A represents 1440P native rendering, while Platform B represents 1440P with FSR set to quality mode.

Platform A

- Average FPS: 73

- Minimum Instantaneous FPS: 68

- Frame time: 13.7ms

- CPU usage: 28%

- CPU power consumption: 97W

- CPU temperature: 50°C

- Memory usage: 12.6GB

- GPU usage: 100%

- VRAM usage: 11.8GB

- GPU power consumption: 237W

- GPU temperature: 47°C

Platform B

- Average FPS: 89

- Minimum Instantaneous FPS: 80

- Frame time: 11.1ms

- CPU usage: 33%

- CPU power consumption: 109W

- CPU temperature: 54°C

- Memory usage: 12.6GB

- GPU usage: 100%

- VRAM usage: 11.0GB

- GPU power consumption: 230W

- GPU temperature: 51°C

These results show that enabling FSR raised CPU usage by 5 percentage points (28% to 33%), CPU power consumption by 12W, and CPU temperature by 4°C. Memory usage did not change, which suggests that the performance gain comes mainly from the GPU side. GPU usage remained at 100% in both modes, GPU power consumption dropped slightly (237W to 230W), and VRAM usage decreased by 0.8GB. The average frame rate with FSR is about 1.2 times that of native mode.
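The comparison above can be reproduced with a few lines of arithmetic. The following is a minimal sketch, not part of the original test: the dictionary keys and the `deltas` helper are names invented here for illustration, and the numbers are copied from the two 1440P lists.

```python
# Figures copied from the 1440P lists above
# (Platform A = native, Platform B = FSR quality mode).
native = {"avg_fps": 73, "cpu_usage_pct": 28, "cpu_power_w": 97,
          "vram_gb": 11.8, "gpu_power_w": 237}
fsr = {"avg_fps": 89, "cpu_usage_pct": 33, "cpu_power_w": 109,
       "vram_gb": 11.0, "gpu_power_w": 230}


def deltas(before, after):
    """Return (absolute change, relative change in %) for each metric."""
    out = {}
    for key in before:
        diff = after[key] - before[key]
        out[key] = (round(diff, 2), round(100 * diff / before[key], 1))
    return out


speedup = fsr["avg_fps"] / native["avg_fps"]
changes = deltas(native, fsr)

print(f"speedup: {speedup:.2f}x")
for name, (abs_change, rel_change) in changes.items():
    print(f"{name}: {abs_change:+} ({rel_change:+}%)")
```

Keeping the absolute and relative changes side by side avoids ambiguity: the CPU usage rise is 5 percentage points, which is a relative increase of roughly 18% over the native figure.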

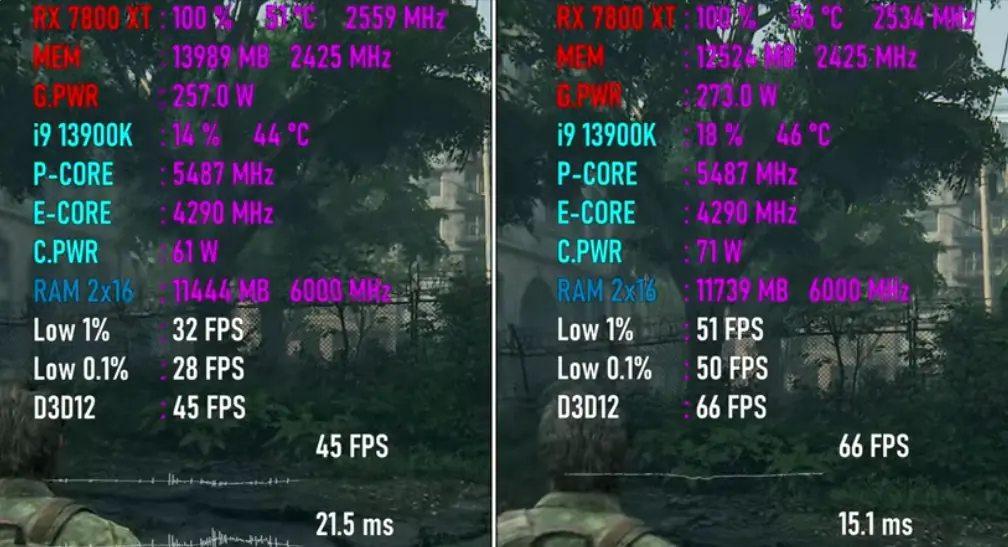

② 4K Graphics Test

Platform A represents 4K native rendering, while Platform B represents 4K with FSR set to quality mode.

Platform A

- Average FPS: 45

- Minimum Instantaneous FPS: 32

- Frame time: 21.5ms

- CPU usage: 14%

- CPU power consumption: 61W

- CPU temperature: 44°C

- Memory usage: 11.4GB

- GPU usage: 100%

- VRAM usage: 14.0GB

- GPU power consumption: 257W

- GPU temperature: 51°C

Platform B

- Average FPS: 66

- Minimum Instantaneous FPS: 51

- Frame time: 15.1ms

- CPU usage: 18%

- CPU power consumption: 71W

- CPU temperature: 46°C

- Memory usage: 11.7GB

- GPU usage: 100%

- VRAM usage: 12.5GB

- GPU power consumption: 273W

- GPU temperature: 56°C

In this case, enabling FSR raised CPU usage by 4 percentage points (14% to 18%) and memory usage by 0.3GB. GPU usage was 100% in both modes, and VRAM usage decreased by 1.5GB with FSR enabled. The average frame rate with FSR was approximately 1.5 times that of native mode. CPU power consumption and temperatures rose slightly, as in the 1440P test. GPU power consumption also rose (257W to 273W), unlike at 1440P, where it fell slightly.
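One plausible reason VRAM usage drops in both tests is that an upscaler renders the scene at a lower internal resolution before scaling it to the output size. The sketch below assumes the game uses the per-axis scale factors AMD documents for FSR upscaling quality modes; the article does not confirm this, and the function names are illustrative only. It also ignores frame generation entirely.

```python
# Per-axis scale factors AMD documents for FSR 2/3 upscaling quality modes.
# Assumption: the game follows these defaults; the article does not confirm it.
FSR_SCALE = {
    "quality": 1.5,
    "balanced": 1.7,
    "performance": 2.0,
    "ultra_performance": 3.0,
}


def internal_resolution(width, height, mode):
    """Return the (width, height) rendered before upscaling to the output size."""
    scale = FSR_SCALE[mode]
    return int(width / scale), int(height / scale)


def pixel_fraction(width, height, mode):
    """Fraction of the output pixel count that is rendered internally."""
    w, h = internal_resolution(width, height, mode)
    return (w * h) / (width * height)


for label, (w, h) in {"1440P": (2560, 1440), "4K": (3840, 2160)}.items():
    rw, rh = internal_resolution(w, h, "quality")
    share = pixel_fraction(w, h, "quality")
    print(f"{label}: renders {rw}x{rh}, {share:.0%} of output pixels")
```

Under these assumptions, quality mode at 4K renders internally at 2560x1440, which is less than half the pixels of native 4K. That would be consistent with the larger gain seen at 4K, where the GPU is under the most pressure.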

03

Comparative Conclusion Based on RX 7800 XT

From the two sets of tests above, we can roughly conclude the following:

After enabling FSR in quality mode, the average frame rate increased by more than 20% compared to native mode (about 22% at 1440P and about 47% at 4K). Since only one game was tested, this conclusion may not generalize and should only serve as a rough reference.
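The percentage gains behind this conclusion can be checked directly from the reported frame rates. This is a small sketch with names chosen here for illustration (`results`, `uplift_pct`); it also computes the gain in minimum FPS, which the conclusion does not cover.

```python
# (native, FSR quality) pairs taken from the result lists above.
results = {
    "1440P": {"avg_fps": (73, 89), "min_fps": (68, 80)},
    "4K": {"avg_fps": (45, 66), "min_fps": (32, 51)},
}


def uplift_pct(native, fsr):
    """Relative frame-rate gain of FSR over native, in percent."""
    return round(100 * (fsr - native) / native, 1)


uplift = {
    res: {metric: uplift_pct(*pair) for metric, pair in metrics.items()}
    for res, metrics in results.items()
}

for res, metrics in uplift.items():
    print(res, metrics)
```

Note that the gain in minimum FPS at 1440P comes out slightly below 20%, so the "more than 20%" figure applies to average frame rates.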

04

Additional Explanation

In tests the editor has shared previously, readers have often asked why the gaming performance of two processors is compared only at 1080P rather than 4K, even though a mid-to-high-end graphics card is used.

The two sets of tests above answer this question. When the game switches to 4K, overall CPU usage drops compared to 1440P (and 1080P); in native mode it fell from 28% to 14%. This indicates that 4K is limited mainly by the graphics card rather than the CPU.

Therefore, testing at 4K lowers the load on the CPU and masks the differences between two processors, which makes it unsuitable for comparing them. This is the main reason.
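The reasoning can be illustrated with a deliberately simplified model in which the delivered frame rate is set by whichever of the CPU or GPU is slower. The CPU names and limits below are hypothetical values chosen for illustration, not measurements; only the 4K GPU limit borrows the 45 FPS native average from the test above.

```python
def delivered_fps(cpu_fps_limit, gpu_fps_limit):
    """Simplified model: the slower stage sets the frame rate."""
    return min(cpu_fps_limit, gpu_fps_limit)


# Hypothetical CPU-side limits (frames per second each CPU could prepare).
cpus = {"cpu_x": 160, "cpu_y": 130}

# GPU-side limits per resolution. The 1080P value is hypothetical;
# the 4K value reuses the 45 FPS native average reported above.
gpu_limit = {"1080P": 200, "4K": 45}

table = {
    res: {name: delivered_fps(limit, gpu) for name, limit in cpus.items()}
    for res, gpu in gpu_limit.items()
}

for res, row in table.items():
    print(res, row)
```

In this model the two CPUs show different results at 1080P, where the GPU has headroom, but identical results at 4K, where the GPU limit hides any difference between them.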

