Digital hardware enthusiasts and gamers all know that video memory (VRAM) capacity is a crucial factor in determining a graphics card’s performance and the ultimate gaming experience.

VRAM is dedicated memory on the graphics card used for storing and exchanging graphic and video data during operation, including frame buffers, textures, geometric data, and more. Insufficient VRAM can cause various serious performance and visual issues in games.

Typical signs of VRAM insufficiency include:

- Reduced frame rates and stuttering during gameplay.

- Visual artifacts like screen tearing, blurriness, missing textures, or incorrect graphics rendering.

- In severe cases, applications (including games and 3D software) crash during operation or fail to launch at all, showing error messages.

However, the focus of this article is not on gaming but on AI, where VRAM capacity is also extremely important.

In AI applications, insufficient VRAM can lead to slower training speeds, inability to load complete models, limited batch sizes, and even abrupt interruptions of training tasks. Some AI software might directly report errors like “CUDA out of memory.”
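To make the capacity pressure concrete, here is a rough back-of-the-envelope sketch in Python. It is an illustration and not something from the original article: the function names are hypothetical, the 7-billion-parameter model is an assumed example, and the figure of 16 bytes per parameter for mixed-precision training with Adam is a commonly quoted rule of thumb that ignores activations and framework overhead.

```python
GIB = 1024 ** 3  # bytes in one GiB


def footprint_gib(n_params: float, bytes_per_param: float) -> float:
    """Approximate memory needed to hold n_params values, in GiB."""
    return n_params * bytes_per_param / GIB


def fits(n_params: float, bytes_per_param: float, vram_gib: float) -> bool:
    """True if the estimated footprint fits in the given VRAM capacity."""
    return footprint_gib(n_params, bytes_per_param) <= vram_gib


N_PARAMS = 7e9       # assumed example: a 7-billion-parameter model
VRAM_GIB = 24        # e.g. a 24GB card such as the GeForce RTX 4090

# Inference with FP16 weights: 2 bytes per parameter.
inference = footprint_gib(N_PARAMS, 2)

# Training rule of thumb (assumption): about 16 bytes per parameter for
# FP16 weights and gradients plus FP32 Adam state, excluding activations.
training = footprint_gib(N_PARAMS, 16)

print(f"FP16 weights only:  {inference:.1f} GiB, fits: {fits(N_PARAMS, 2, VRAM_GIB)}")
print(f"Training estimate:  {training:.1f} GiB, fits: {fits(N_PARAMS, 16, VRAM_GIB)}")
```

Under these assumptions the weights alone take roughly 13 GiB and fit on a 24GB card, while the training estimate exceeds 100 GiB. That gap is where “CUDA out of memory” errors tend to come from.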

This is why AI users pursue graphics cards with large VRAM capacities and why modified versions of the GeForce RTX 2080 Ti with 22GB VRAM have become highly sought after.

However, increasing VRAM capacity through various modification methods is just a workaround and often comes with certain drawbacks and limitations. The ideal approach is to purchase the latest graphics cards with large VRAM capacities, such as the GeForce RTX 4090 24GB.

For gamers, the problem can be solved by buying a GeForce RTX 4090, although the price is a stretch for many.

AI applications are different; they require not only large VRAM capacity but also immense computational power. For commercial projects, buying a single GeForce RTX 4090 is far from sufficient. Companies like Microsoft and OpenAI purchase A100 professional training cards in bulk.

The high cost of the GeForce RTX 4090 makes it difficult for most small studios and individual AI users to buy more than a few units.

Therefore, finding a low-cost way to increase VRAM capacity becomes a critical challenge.

Recently, there’s been good news in this area. A company supported by the Korea Advanced Institute of Science and Technology (KAIST), Panmnesia, has developed a solution to extend GPU VRAM using CXL memory expanders.

To understand this solution, one must first understand “CXL memory.” Compute Express Link (CXL) is an open interconnect standard that runs over the PCIe physical layer and keeps memory accesses cache-coherent between devices. “CXL memory” is memory attached through such a link, giving high-speed, low-latency access compared with conventional PCIe I/O.

This technology allows CPUs and other computing units (like GPUs and FPGAs) to share and access a unified memory space, improving data processing efficiency and overall system performance.

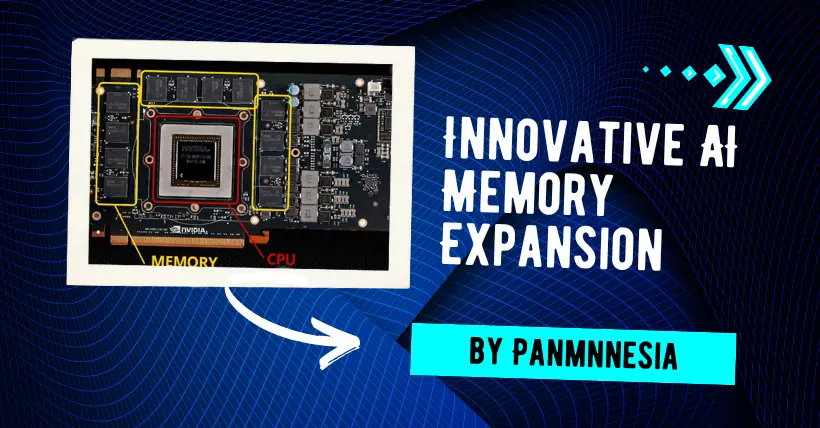

Panmnesia has developed a root complex compliant with the CXL 3.1 standard. It has multiple root ports (RPs) for connecting external memory through PCIe, plus a host bridge with a host-managed device memory (HDM) decoder connected to the GPU’s system bus.

The HDM decoder in the host bridge manages the system memory address ranges, “tricking” the GPU’s memory subsystem into “thinking” it is using the graphics card’s onboard VRAM when it is actually using external DRAM or NAND connected via PCIe.

In simple terms, this technology allows for using DDR5 memory or solid-state drives to expand the GPU’s VRAM.
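The address-routing idea can be sketched as a toy model in Python. This is not Panmnesia’s implementation, and a real HDM decoder is a hardware structure, not software. The class names, target labels, and capacities below are all hypothetical and chosen only to show how one flat address space can be split between onboard VRAM and memory behind root ports.

```python
from dataclasses import dataclass

GIB = 1 << 30  # bytes in one GiB


@dataclass(frozen=True)
class AddressRange:
    base: int     # first address covered by this range
    size: int     # length of the range in bytes
    target: str   # hypothetical label for the backing memory

    def contains(self, addr: int) -> bool:
        return self.base <= addr < self.base + self.size


class ToyDecoder:
    """Toy stand-in for an address decoder: maps an address to a target."""

    def __init__(self, ranges):
        self.ranges = sorted(ranges, key=lambda r: r.base)

    def route(self, addr: int):
        """Return (target, offset within that target) for an address."""
        for r in self.ranges:
            if r.contains(addr):
                return r.target, addr - r.base
        raise ValueError(f"address {addr:#x} is not mapped")


# Assumed layout: 24 GiB onboard, 64 GiB of DRAM and 256 GiB of NAND
# attached behind two root ports. The GPU sees one contiguous space.
decoder = ToyDecoder([
    AddressRange(0, 24 * GIB, "onboard_vram"),
    AddressRange(24 * GIB, 64 * GIB, "cxl_dram_rp0"),
    AddressRange(88 * GIB, 256 * GIB, "cxl_nand_rp1"),
])

print(decoder.route(4096))               # lands in onboard VRAM
print(decoder.route(24 * GIB + 4096))    # lands in external DRAM
```

The requester only ever issues an address. Which physical memory serves it is decided entirely by the range table, which is why the GPU does not need to know that part of its “VRAM” is external.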

That it works is one thing; whether it works well, particularly in terms of latency, is what really matters.

According to Panmnesia’s performance test results, this solution (CXL-Opt) is 3.22 times faster than the unified virtual memory approach (UVM) and 1.65 times faster than another solution (CXL-Proto) developed by Samsung and Meta.
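The two figures can be put on one scale with a little arithmetic. The sketch below assumes that both numbers refer to the same workload and that “times faster” means a ratio of execution times; the article does not state the metric, so the derived value is only indicative. The variable names are hypothetical.

```python
# Reported figures for CXL-Opt (from the article).
speedup_vs_uvm = 3.22     # CXL-Opt relative to UVM
speedup_vs_proto = 1.65   # CXL-Opt relative to CXL-Proto

# Normalize UVM execution time to 1.0 (assumption: same workload, same metric).
t_uvm = 1.0
t_opt = t_uvm / speedup_vs_uvm       # CXL-Opt time relative to UVM
t_proto = t_opt * speedup_vs_proto   # CXL-Proto time relative to UVM

# Implied advantage of CXL-Proto over UVM under these assumptions.
implied_proto_vs_uvm = t_uvm / t_proto

print(f"CXL-Opt time:   {t_opt:.3f} x UVM")
print(f"CXL-Proto time: {t_proto:.3f} x UVM")
print(f"CXL-Proto vs UVM: {implied_proto_vs_uvm:.2f}x")
```

Read this way, CXL-Proto would itself be a little under twice as fast as UVM, with CXL-Opt adding a further 1.65 times on top.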

Overall, Panmnesia’s solution shows great potential, especially for budget-conscious small and medium-sized AI developers. However, for widespread commercial adoption, AMD and Nvidia need to add CXL support to their graphics cards.

The most likely scenario is that AMD and Nvidia will develop similar solutions of their own, perhaps under different terms and names.

