According to TrendForce's latest “Enterprise SSD” research report, AI demand has surged, prompting AI server clients to place additional Enterprise SSD orders with suppliers over the past two quarters. To meet SSD demand for AI applications, upstream suppliers have accelerated process upgrades and plan to launch 2YY-layer products, with mass production expected in 2025.

TrendForce reports that the additional orders from AI server clients have caused the cumulative price increase of Enterprise SSDs to exceed 80% between Q4 2023 and Q3 2024.

SSDs play a crucial role in AI development. During AI model training, they store model parameters, including continuously updated weights and biases. They are also used to create checkpoints that periodically save training progress, so that training can resume from a specific point after an interruption. Both functions rely heavily on high-speed transmission and write endurance, which is why clients primarily opt for 4TB/8TB TLC (Triple-Level Cell) SSD products to meet the demanding requirements of the training process.
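The checkpointing pattern described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular training framework does it: the function names (`save_checkpoint`, `load_checkpoint`, `train`), the pickle format, and the placeholder weight update are all assumptions made for the example. It shows why checkpoints generate repeated, large sequential writes: the full state is rewritten to storage at every interval.

```python
import os
import pickle
import tempfile


def save_checkpoint(state: dict, path: str) -> None:
    """Write `state` to `path` atomically so a crash never leaves a partial file."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    try:
        with os.fdopen(fd, "wb") as f:
            pickle.dump(state, f)
            f.flush()
            os.fsync(f.fileno())  # ask the OS to push the bytes to the drive
        os.replace(tmp_path, path)  # atomically replace the previous checkpoint
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


def load_checkpoint(path: str):
    """Return the saved state, or None if no checkpoint exists yet."""
    if not os.path.exists(path):
        return None
    with open(path, "rb") as f:
        return pickle.load(f)


def train(total_steps: int, checkpoint_every: int, path: str) -> dict:
    """Toy training loop that resumes from the last checkpoint if one exists."""
    state = load_checkpoint(path) or {"step": 0, "weights": [0.0, 0.0]}
    while state["step"] < total_steps:
        # Placeholder update; a real trainer would apply gradients here.
        state["weights"] = [w + 0.1 for w in state["weights"]]
        state["step"] += 1
        if state["step"] % checkpoint_every == 0:
            save_checkpoint(state, path)
    return state
```

If the process is interrupted, calling `train` again with the same path picks up from the last saved step instead of starting at step 0. Work done after the most recent checkpoint is lost, which is the trade-off behind choosing the checkpoint interval.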

TrendForce notes that SSDs used in AI inference servers can help adjust and optimize AI models during inference, particularly because SSDs can update data in real time to fine-tune inference results. AI inference mainly provides Retrieval-Augmented Generation (RAG) and Large Language Model (LLM) services, with SSDs storing the documents and knowledge bases that RAG and LLM reference to generate more informative responses.
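To make the storage role in RAG concrete, here is a toy sketch of the retrieval step. It assumes the knowledge base is a directory of plain-text files and ranks them by simple word overlap with the query; production systems typically use vector indexes instead. The function names (`tokenize`, `retrieve`, `build_prompt`) are hypothetical and chosen only for illustration.

```python
import os


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of punctuation-free words."""
    words = (w.strip(".,?!:;").lower() for w in text.split())
    return {w for w in words if w}


def retrieve(query: str, doc_dir: str, top_k: int = 1) -> list[str]:
    """Read every document from storage and return the top_k best matches."""
    query_words = tokenize(query)
    scored = []
    for name in sorted(os.listdir(doc_dir)):
        with open(os.path.join(doc_dir, name), encoding="utf-8") as f:
            text = f.read()
        overlap = len(query_words & tokenize(text))
        scored.append((overlap, text))
    # Highest overlap first; the sort is stable, so ties keep filename order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for score, text in scored[:top_k] if score > 0]


def build_prompt(query: str, passages: list[str]) -> str:
    """Prepend the retrieved passages to the question sent to the LLM."""
    context = "\n".join(passages)
    return f"Context:\n{context}\n\nQuestion: {query}"
```

Every query in this sketch triggers reads across the stored documents, which illustrates why inference-side storage is read-heavy and grows with the size of the knowledge base.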

Additionally, as more generated content is displayed as videos or images, the corresponding data storage volume also increases. Therefore, large-capacity SSDs like 16TB or higher TLC/QLC (Quad-Level Cell) have become the primary products used in AI inference.

The annual growth rate of AI SSD demand exceeds 60%, and suppliers are accelerating the development of large-capacity products.

In the 2024 AI server SSD market, demand for products of 16TB or higher has risen significantly since the second quarter. In addition, as NVIDIA’s H100, H20, and H200 series products are delivered, clients are further increasing their orders for 4TB and 8TB TLC Enterprise SSDs.

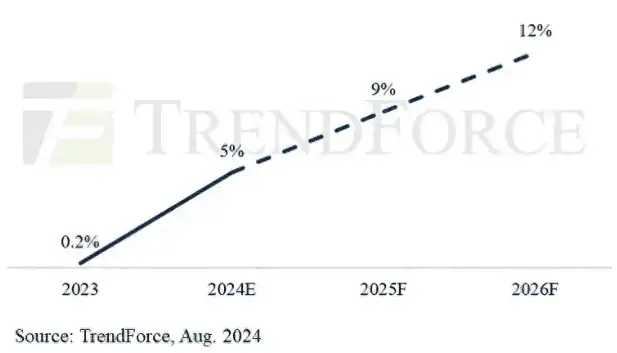

TrendForce estimates that this year, the AI-related SSD procurement capacity will exceed 45EB. In the coming years, AI servers are expected to drive an average annual growth rate in SSD demand of over 60%, with AI SSD demand likely to increase from 5% of total NAND Flash demand in 2024 to 9% in 2025.
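As a rough illustration of what these figures imply, the sketch below compounds the 45EB estimate at a constant 60% per year. Both inputs are lower bounds in the report ("exceed 45EB", "over 60%"), and a constant rate is a simplifying assumption, so the output is an illustrative floor and not a TrendForce forecast. The function name `project_demand` is hypothetical.

```python
def project_demand(base_eb: float, annual_growth: float, years: int) -> list[float]:
    """Compound `base_eb` at `annual_growth` for `years` years, base year included."""
    return [base_eb * (1 + annual_growth) ** n for n in range(years + 1)]


if __name__ == "__main__":
    # Assumed inputs: 45 EB in 2024 and a constant 60% annual growth rate.
    for offset, demand in enumerate(project_demand(45.0, 0.60, 3)):
        print(2024 + offset, round(demand, 1), "EB")
```

Under these assumptions, demand would reach roughly 72EB in 2025, about 115EB in 2026, and about 184EB in 2027.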

On the supply side, as the trend toward using large-capacity SSDs in inference servers continues, suppliers have begun accelerating process upgrades, planning to mass-produce 2YY/3XX layer process products and launch 120TB Enterprise SSD products starting in the first quarter of 2025.

Source: TrendForce
