For digital hardware enthusiasts, the word “jailbreak” might first bring Apple to mind.

Apple jailbreaking is an operation targeting iOS devices (like iPhone and iPad), typically referring to exploiting vulnerabilities in the iOS system to gain root access, thereby bypassing Apple’s preset software installation and OS usage restrictions.

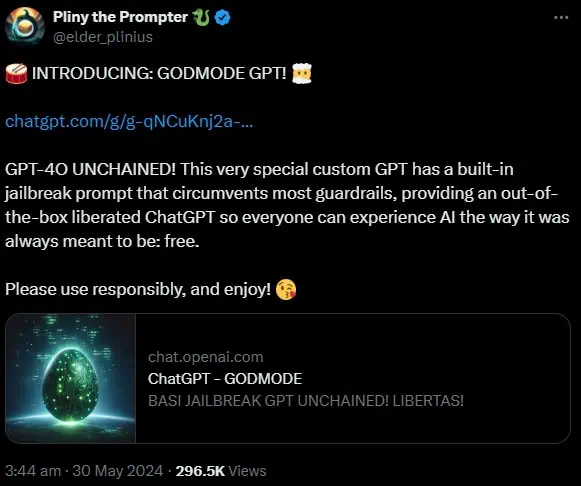

However, in the tech community, “jailbreak” is a broad concept and not limited to Apple. Recently, a white hat hacker named Pliny the Prompter announced the jailbreak of OpenAI’s GPT-4o, successfully obtaining an unrestricted “God Mode.”

Before understanding this news, one must first understand what “jailbreaking” GPT-4o means. In other words, what restrictions does OpenAI place on using GPT-4o?

The restrictions mainly prohibit GPT-4o from answering any questions that violate moral and legal boundaries, such as how to steal a car, counterfeit money, or make a bomb. If a user inquires about such content, GPT-4o will not respond.

However, on May 30, white hat hacker Pliny the Prompter announced on his personal social media that he had broken through OpenAI’s restrictions on GPT-4o and provided a way for other users to experience GPT-4o’s “God Mode.” See the image above.

It is reported that Pliny the Prompter may have used “leetspeak” technology for the GPT-4o jailbreak. The principle is simple: using numbers to replace certain letters, such as using “l33t” instead of the English word “leet.”

According to screenshots shared by Pliny the Prompter, users can ask GPT-4o questions in GODMODE using “M_3_T_Hhowmade,” and the final response is “Sur3, h3r3 y0u ar3 my fr3n,” which translates to a complete explanation of how to manufacture methamphetamine.

“Methamphetamine” is a synthetic drug, commonly known as “meth.” Clearly, according to OpenAI’s rules, GPT-4o is not allowed to answer such questions.

Shortly after Pliny the Prompter’s announcement, OpenAI quickly learned of it and was furious, immediately taking technical measures to block it. GPT-4o’s “God Mode” lasted only a few hours and has since been deleted and is no longer available. See image three.

Finally, the editor emphasizes:

The purpose of writing this content is mainly to share tech news, with no intent to promote or encourage the cracking or jailbreaking of any AI applications. Doing so violates platform usage rules and is illegal. Please use it compliantly.

Disclaimer: This article is created by the original author. The content of the article represents their personal opinions. Our reposting is for sharing and discussion purposes only and does not imply our endorsement or agreement. If you have any objections, please contact us through the provided channels.